The best of times

Conference interpretation is a field that has long profited from innovation – reaping the benefits of advances in technology, culminating in the state-of-the-art conference rooms at the UN. The goal of these innovations has always been to optimize the quality of the meetings we hold. A crucial part of this quality is interpretation, which requires the best possible audio feed to the interpreters. The nuts and bolts of simultaneous interpretation have not changed over time: we must be able to hear, understand, speak and monitor our output simultaneously – and for that we need the highest possible quality audio.

The no longer normal

When the COVID-19 pandemic started, the urgency of the situation meant that Remote Simultaneous Interpretation (RSI) platforms were introduced quickly, without time to test the new technology thoroughly or to consider fully the effects it would have on interpreters.

However, after working with RSI for two years, we have seen that the quality of the sound we receive from remote participants via the platforms is simply not up to the standard we need in order both to do our job to the best of our ability and to protect our hearing.

As we explained in a previous UN Today article, both the platforms and the peripherals are responsible for the poor quality of the sound received: sound that falls far short of the relevant ISO standards and may justly be described as toxic, that is, capable of damaging hearing.

There are three main problems:

1. Loss of audio frequencies

For the purposes of simultaneous interpretation, access to frequencies up to 15000 Hz is essential: this enables interpreters to localize sound, compensating for the fact that they are speaking whilst listening, and to distinguish between different sounds and phonemes (‘f’ and ‘s’; ‘sh’ and ‘ch’, for example).

To illustrate the point, this is the frequency spectrum of a typical adult male voice when it is not transmitted through a platform:

FIGURE 1

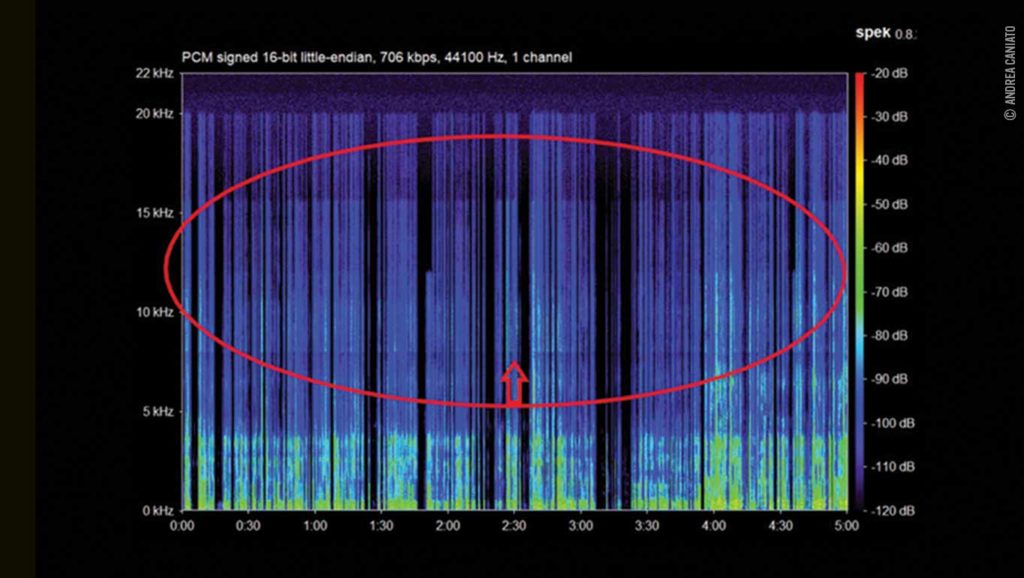

Green represents audible sound, and it consistently reaches 10000 Hz; there are many peaks reaching and even exceeding 15000 Hz. But when the same voice is transmitted through a well-known RSI platform, nearly all of the audible sound is concentrated below 4000 Hz:

FIGURE 2

This failure to deliver the full range of frequencies needed was confirmed in a recent UN Geneva test that measured the ISO compliance of seven platforms: all seven failed.

And it is not just the platforms that cut key frequencies: so do laptop microphones, earpods, earbuds and many, if not most, models of headsets.
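A ceiling near 4000 Hz is consistent with, though not proof of, audio sampled at 8000 Hz, the rate used by classic narrowband telephony. By the Nyquist-Shannon sampling theorem, a digital signal sampled at rate $f_s$ can only represent frequencies below $f_s/2$. The measurements above do not state what sampling rate or codec settings the platform used, so the rates below are illustrative assumptions:

$$
f_{\max} < \frac{f_s}{2}, \qquad f_s = 8000\ \text{Hz} \;\Rightarrow\; f_{\max} < 4000\ \text{Hz}, \qquad f_{\max} = 15000\ \text{Hz} \;\Rightarrow\; f_s > 30000\ \text{Hz}
$$

In other words, reaching the 15000 Hz that interpreters need requires a wideband format (for example the 48000 Hz rate common in professional audio) at every link in the chain, since a microphone, headset or codec that filters out high frequencies anywhere along the way removes them for good.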

2. Low signal-to-noise ratio

A low signal-to-noise ratio results both from the narrowband audio produced by this frequency cutting and from the generally poor quality of the original audio. The latter is caused by, among other things, omnidirectional microphones that pick up all background noise; poor internet connections; reverberation in rooms with, for example, high ceilings; and interference from notifications on phones and computers. In other words, the sound is not intelligible, especially while we are speaking over it, and the best we can do to compensate is to turn up the volume. Given the toxic sound inherent to RSI, this can be harmful.
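Signal-to-noise ratio is conventionally expressed in decibels, comparing the power of the wanted signal (here, the speaker's voice) with the power of everything else that reaches the interpreter. The worked figures below are illustrative, not measurements from any platform:

$$
\mathrm{SNR}_{\mathrm{dB}} = 10\log_{10}\frac{P_{\text{signal}}}{P_{\text{noise}}}, \qquad \frac{P_{\text{signal}}}{P_{\text{noise}}} = 100 \;\Rightarrow\; \mathrm{SNR}_{\mathrm{dB}} = 10\log_{10}100 = 20\ \text{dB}
$$

Because turning up the volume amplifies signal and noise by the same factor, the ratio, and with it intelligibility, does not improve; only the overall sound pressure reaching the ear rises.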

3. Aggressive dynamic range compression

RSI platforms, as well as many headset models, manipulate the sound they transmit in a process known as ‘dynamic range compression,’ an integral part of their design. The underlying aim seems benign: to narrow the gap between the loudest and quietest parts of the signal, evening out volume levels to achieve a more uniform sound.
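As a rough illustration of how such "evening out" works, the sketch below models only the static input/output curve of a simple hard-knee compressor: any level above a threshold is scaled down by a ratio, and a "make-up" gain is then added to the whole signal. The threshold, ratio and make-up values are hypothetical, not the settings of any RSI platform or headset, and real compressors also apply attack and release times that this sketch ignores.

```python
def compressed_level_db(
    input_db: float,
    threshold_db: float = -20.0,  # hypothetical threshold
    ratio: float = 4.0,           # hypothetical 4:1 compression ratio
    makeup_db: float = 10.0,      # hypothetical make-up gain
) -> float:
    """Return the output level (dB) of a static hard-knee compressor."""
    if input_db > threshold_db:
        # Above the threshold, every `ratio` dB of input yields only 1 dB of output.
        input_db = threshold_db + (input_db - threshold_db) / ratio
    # Make-up gain raises the whole signal, quiet passages included.
    return input_db + makeup_db


if __name__ == "__main__":
    quiet, loud = -40.0, 0.0  # input levels in dB, 40 dB apart
    out_quiet = compressed_level_db(quiet)
    out_loud = compressed_level_db(loud)
    print(f"quiet: {quiet} -> {out_quiet} dB")
    print(f"loud:  {loud} -> {out_loud} dB")
    print(f"range: {loud - quiet} dB in, {out_loud - out_quiet} dB out")
```

With these values, a quiet passage at -40 dB comes out at -30 dB and a loud one at 0 dB comes out at -5 dB: the 40 dB spread shrinks to 25 dB, and the quiet passage is now 10 dB louder than it was. A more uniform sound, in this simple model, is also a louder one on average.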

The result, however, is unrelenting high sound pressure in the frequencies that are transmitted, which lie in ranges to which the inner ear is particularly sensitive. The middle ear’s defensive reflexes are quickly overwhelmed and disabled, leaving the delicate inner ear exposed to the full range of poor-quality audio described above, plus sudden surges in volume.

This phenomenon was investigated in a recent study from the University of Clermont-Auvergne involving guinea pigs, whose inner ear anatomy is very similar to that of humans. After exposure to compressed sound, the guinea pigs suffered auditory fatigue and weakened middle ear defenses. The study’s author calls for heightened awareness of the dangers that compressed sound likely poses to human auditory health.

So far at UN Geneva, the negative effects have mostly been seen among interpreters: in the past year, some 60% of them have reported suffering various symptoms (tinnitus, hyperacusis, pain in the ear, vertigo, dizziness) after interpreting via RSI platforms.

An end to complacency

For interpreters, this innovation has brought a disastrous decline in working conditions. Given the current limitations of the technology, our best hope of improvement lies in a swift return to fully in-person meetings. Where this is not possible, participants must, in the immediate term, be persuaded to adhere strictly to equipment requirements so as to mitigate RSI’s negative impact on our health.

It is time to take urgent action to shape the tools we use, rather than simply rely on what is available: platforms must change their transmission mode and develop a format that meets the relevant ISO standards, eliminates aggressive dynamic range compression, and does no harm. Where health and working conditions are concerned, innovation must not lead to deterioration.