It goes without saying that the COVID-19 pandemic has radically changed our way of working. Most notably, meetings have moved online. Over the last year, however, it has become apparent that Remote Simultaneous Interpreting (RSI) platforms present a particular set of difficulties for interpreters. The root of these difficulties is the very poor quality of the audio.

This wasn’t a problem during in-person meetings, primarily because the conference rooms at UNOG are professional settings, designed and equipped to the ISO standards required for conference interpreting. Each speaker has a unidirectional microphone directly in front of them, which picks up only the sound of their voice and delivers the full range of audio frequencies that simultaneous conference interpreters require to do their jobs.

By contrast, the built-in computer microphones that participants most often use in remote meetings are a very different beast. They are designed for convenience rather than transmission of high-quality audio: for example, in order to allow you to move around freely during your meeting, they pick up sound coming from anywhere in the room – in other words they are omnidirectional. Unfortunately, this has a negative effect on the quality of the sound they deliver.

As an alternative, participants frequently resort to the type of earphones that come with their smartphone (whether wired or wireless), expecting that they will provide somewhat better sound. Unfortunately, this is rarely the case, not least because these earphones are only capable of delivering one fifth of the audio frequency range that interpreters require, in some cases limiting them to the same range that is transmitted by telephone lines. This has a major impact on the intelligibility of speech: we all know how people’s voices sound different over the phone. Like computer microphones, earphone microphones are omnidirectional. They are also positioned too far from the speaker’s mouth, so the voice is too faint. With wireless earbuds, the microphone sits to the side of the face, so the voice verges on the inaudible. With wired earphones, the microphone hangs out of line with the mouth, which again makes the voice too faint. Moreover, the wired microphone frequently bangs on the desk or is handled by the speaker, creating sudden surges in volume that are dangerous for interpreters.
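The "one fifth" figure can be sanity-checked with simple arithmetic. The sketch below is illustrative only and rests on two assumptions that do not come from this text: that the range interpreters require is the 125 Hz to 15,000 Hz band commonly cited from the ISO simultaneous interpreting standards, and that a telephone line carries the conventional narrowband range of 300 Hz to 3,400 Hz. Bandwidth is compared on a plain linear scale.

```python
# Assumed figures (not stated in the text above):
# - band commonly cited from the ISO simultaneous interpreting standards
# - conventional narrowband telephone range
REQUIRED_HZ = (125, 15_000)
TELEPHONE_HZ = (300, 3_400)


def bandwidth(band):
    """Width of a frequency band in hertz, measured on a linear scale."""
    low, high = band
    return high - low


# Share of the required range that survives on a telephone-grade channel.
share = bandwidth(TELEPHONE_HZ) / bandwidth(REQUIRED_HZ)
print(f"{share:.0%}")  # prints 21%
```

Under these assumptions, a telephone-grade channel carries roughly a fifth of the required range, which is consistent with the figure given above.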

Participants are often unaware of the omnidirectional nature of the microphones they are using, so they participate from settings that are far too noisy. They rarely pick a small, quiet room where they are alone and where the windows and doors are closed – which is the ideal scenario. Other participants may hardly even notice the background noise, but for the interpreter, who is focusing intently on the speaker’s voice and straining to understand every word they are saying, sudden, jarring noises can be extremely painful and dangerous.

The problems with microphones and setting are compounded by the issue of the internet connection, which is crucial given that the audio and video feed in a remote meeting is transmitted to participants via the internet. Unfortunately, internet speed and connection are notoriously unpredictable. When the speed suddenly dips, the signal cuts out and entire words or parts of sentences can be distorted or lost completely. There can also be significant fluctuations in volume level as a result.

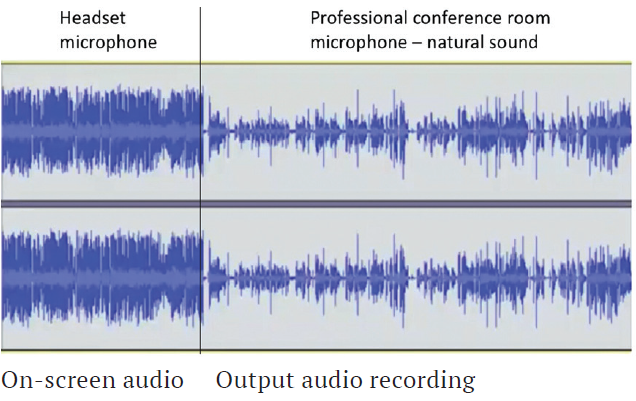

To compensate for the wide range of technical setups in use and to improve the experience for listeners (although not for interpreters), the RSI platforms apply various types of digital sound processing. For instance, they try to equalize volume levels between different speakers in order to avoid large fluctuations, but this has the effect of increasing the sound pressure to which listeners and interpreters are exposed, which is harmful. The image above illustrates the difference in sound pressure between a voice which has been compressed (left) and one which has not (right).
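To see why equalizing volume levels can raise overall exposure, consider a minimal sketch of dynamic range compression. It is not the processing any RSI platform actually applies: the threshold, ratio, test tones, and function names are all hypothetical, and a real compressor would also use attack and release times. The sketch compresses a quiet "speaker" followed by a loud one, then applies make-up gain so that the peak level is the same as before.

```python
import math


def rms(samples):
    """Root-mean-square level, a rough proxy for average sound pressure."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def peak(samples):
    return max(abs(s) for s in samples)


def compress(samples, threshold=0.2, ratio=4.0):
    """Static compressor: the part of each sample above the threshold is divided by the ratio."""
    out = []
    for s in samples:
        mag = abs(s)
        if mag > threshold:
            mag = threshold + (mag - threshold) / ratio
        out.append(math.copysign(mag, s))
    return out


def apply_makeup_gain(samples, target_peak):
    """Scale the signal so that its peak matches target_peak."""
    gain = target_peak / peak(samples)
    return [s * gain for s in samples]


def tone(amplitude, n=4800, freq=200.0, rate=48000):
    """Hypothetical stand-in for a voice: a sine tone of the given amplitude."""
    return [amplitude * math.sin(2 * math.pi * freq * i / rate) for i in range(n)]


# A quiet speaker followed by a loud speaker.
signal = tone(0.1) + tone(0.9)
original_peak = peak(signal)

# Compress, then bring the peak back up to where it was.
processed = apply_makeup_gain(compress(signal), original_peak)

print(f"peak before: {original_peak:.3f}  after: {peak(processed):.3f}")
print(f"RMS  before: {rms(signal):.3f}  after: {rms(processed):.3f}")
```

In this toy case the peak level is unchanged, but the RMS level rises: the quiet passages are lifted and the loud ones are flattened towards the peak, so the listener's ear gets less respite at the same nominal maximum volume.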

This is only one example of the types of processing which the platforms apply. Unfortunately, the end result is sound that is over-processed, unnatural, and incoherent, especially for interpreters, who are speaking at the same time as they are listening.

In addition, the platforms generally transmit a much more limited range of frequencies than the microphones in the conference rooms, which has a major impact on the intelligibility of speech. For instance, with a very limited frequency range it becomes impossible to distinguish between the sounds ‘s’ and ‘f’. For interpreters, who must hear every word clearly in order to be able to interpret a speaker’s message completely and accurately, this can pose a major problem.

This poor-quality sound forces interpreters to turn up the volume much higher than we normally would in order to be able to interpret anything of the message we are receiving (although still not to a level that would, in normal circumstances, be considered unsafe and unacceptable). As a result, we are exposed to unnatural and incoherent sound at higher than average volume, with dangerously high sound pressure levels, for sustained periods of time.

All of this is coming at a cost to our health. After only a few months of working in remote meetings, many interpreters have started to experience auditory disorders we had never experienced before, and these have only gotten worse over time. The symptoms include tinnitus (a ringing in the ear), ear and jaw pain, hypersensitivity to all sound, severe headaches, and dizziness. Several interpreters suffering from these symptoms have been on sick leave for several months and are unsure if they will ever be able to return to the booth. Others have already been told by doctors that their symptoms are permanent.

This cluster of symptoms is generally thought to arise due to the excessive contraction of muscles in the middle ear. The function of these muscles is to protect the inner ear from any damage that could cause permanent hearing loss. They contract in response to loud or unexpected sounds, which are perceived by the auditory system as being threatening and potentially harmful. Therefore, as long as the current harmful sound continues to be delivered, interpreters will continue to suffer from these symptoms, and over time more and more interpreters will run the risk of long-term, possibly permanent, injury.

DCM has begun taking steps to improve sound quality to the greatest extent possible whilst we are still relying on the current RSI platforms. For instance, they recently gave Interpretation Service the green light to film a series of short videos explaining to remote participants the problems caused by unsuitable equipment, connection, and setting, and what they should be doing instead.

The challenge will be ensuring that every single remote participant successfully meets the minimum criteria for equipment and setting. These criteria will need to be mandatory. Otherwise, the interpreters will continue to be exposed to a serious risk that could potentially rob them of their livelihoods. However, correct equipment use by participants will make little difference if the UN continues to use RSI platforms that deliver degraded audio and that fail to meet ISO standards. Should the organization see value in an RSI tool for business continuity beyond the current ad hoc COVID-19 setup, then attention needs to shift immediately to researching an ISO-compliant solution. This research process must be led by the key stakeholders – chief among them the interpreters, but also colleagues from A/V, IT, and Conference Services inter alia. Such a process must be thorough and may therefore take time; we must not rush into another solution that will actively harm colleagues.